Project Overview

I created this game with three of my classmates, Steppe Schoolkate, Pia Schröter, and Arthur Stolz, for one of our game development courses. The goal was a tactical 3D top-down RTS shooter in Unity, inspired by the popular Door Kickers franchise.

You play as a commander observing the mission area through the thermal camera of a military drone circling the area of operations. You command a small squad of four soldiers using standard RTS controls. The level contains various objectives, and completing all of them wins the game. However, you are not alone in this area. A handful of highly aggressive, lethal, intelligent, alien-like specimens roam the area, hunting your men. They reduce their thermal silhouette, and thus their visibility to the player, by hiding among trees, and they try to catch soldiers off guard to kill them instantly. The specimens are not invincible, but they are hard to kill. Moving too fast can leave your men vulnerable to ambushes, while moving too slowly leaves them exposed to attack for longer. The soldiers automatically open fire when they spot a specimen, but friendly fire incidents can occur.

The project's design required intelligent AI behaviour for the specimens to give the player a real challenge. I was very interested in delving deeper into complex game AI behaviours, so I took on the design and development of this AI behaviour as my main responsibility in the project. The following sections describe the process of creating this system.

Behaviour Tree System

The first decision was which method to use to implement the system. The overall behaviour had to be fairly complex, with several behaviour states that each contained sub-states of their own. I therefore discarded the idea of a state machine, worried that it would quickly become too messy. Instead, I chose behaviour trees because they are easy to extend.

However, this created the first big challenge of the project. The project was developed in Unity, which has no built-in support for behaviour trees. That left me with a choice. I could buy a powerful, well-maintained add-on from the Unity Asset Store for 80€ with all the functionality I could need, or I could build the system from scratch in C#. I chose the latter, as I saw it as an excellent opportunity to learn the inner workings of behaviour trees.

Fortunately, plenty of resources were available online, and I quickly had an inheritance-based behaviour tree implementation working. It contains the standard composite nodes (parallel, sequence, selector), the standard decorator nodes (inverter, repeater, succeeder), and the standard leaf nodes (action, condition). Each tree also includes a blackboard: a dictionary of shared variables that every node in the tree can read from and write to.
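The following is a minimal sketch of such an inheritance-based node hierarchy. It is written in Python for brevity; the project itself used C#. The class names, the three-valued `Status`, and the plain-dictionary blackboard are assumptions for illustration. For simplicity, the composites re-evaluate from their first child on every tick instead of remembering which child is still running.

```python
from enum import Enum, auto
from typing import Any, Callable, Dict

Blackboard = Dict[str, Any]  # shared variables every node can read and write

class Status(Enum):
    SUCCESS = auto()
    FAILURE = auto()
    RUNNING = auto()

class Node:
    def tick(self, bb: Blackboard) -> Status:
        raise NotImplementedError

class Composite(Node):
    def __init__(self, *children: Node) -> None:
        self.children = list(children)

class Sequence(Composite):
    """Runs children in order; stops at the first one that does not succeed."""
    def tick(self, bb):
        for child in self.children:
            status = child.tick(bb)
            if status is not Status.SUCCESS:
                return status
        return Status.SUCCESS

class Selector(Composite):
    """Tries children in order; returns the first result that is not a failure."""
    def tick(self, bb):
        for child in self.children:
            status = child.tick(bb)
            if status is not Status.FAILURE:
                return status
        return Status.FAILURE

class Parallel(Composite):
    """Ticks every child; fails if any fails, succeeds once all succeed."""
    def tick(self, bb):
        results = [child.tick(bb) for child in self.children]
        if Status.FAILURE in results:
            return Status.FAILURE
        if all(r is Status.SUCCESS for r in results):
            return Status.SUCCESS
        return Status.RUNNING

class Decorator(Node):
    def __init__(self, child: Node) -> None:
        self.child = child

class Inverter(Decorator):
    """Swaps success and failure; a running child passes through unchanged."""
    def tick(self, bb):
        status = self.child.tick(bb)
        if status is Status.RUNNING:
            return status
        return Status.FAILURE if status is Status.SUCCESS else Status.SUCCESS

class Succeeder(Decorator):
    """Ticks its child and reports success regardless of the child's result."""
    def tick(self, bb):
        self.child.tick(bb)
        return Status.SUCCESS

class Repeater(Decorator):
    """Succeeds after the child has finished `times` runs (-1 = repeat forever)."""
    def __init__(self, child: Node, times: int = -1) -> None:
        super().__init__(child)
        self.times, self.count = times, 0

    def tick(self, bb):
        if self.child.tick(bb) is not Status.RUNNING:
            self.count += 1  # one more completed run of the child
        if 0 <= self.times <= self.count:
            self.count = 0
            return Status.SUCCESS
        return Status.RUNNING

class Action(Node):
    def __init__(self, fn: Callable[[Blackboard], Status]) -> None:
        self.fn = fn

    def tick(self, bb):
        return self.fn(bb)

class Condition(Node):
    def __init__(self, predicate: Callable[[Blackboard], bool]) -> None:
        self.predicate = predicate

    def tick(self, bb):
        return Status.SUCCESS if self.predicate(bb) else Status.FAILURE

# Usage: stalk (RUNNING) while a target is visible, otherwise patrol (SUCCESS).
tree = Selector(Sequence(Condition(lambda b: b["target_visible"]),
                         Action(lambda b: Status.RUNNING)),
                Action(lambda b: Status.SUCCESS))
print(tree.tick({"target_visible": False}))  # Status.SUCCESS
print(tree.tick({"target_visible": True}))   # Status.RUNNING
```

The selector/sequence pairing in the usage lines is the usual way to express "do this if that condition holds, otherwise fall back": the condition sits as the first child of a sequence, and the sequence sits under a selector.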

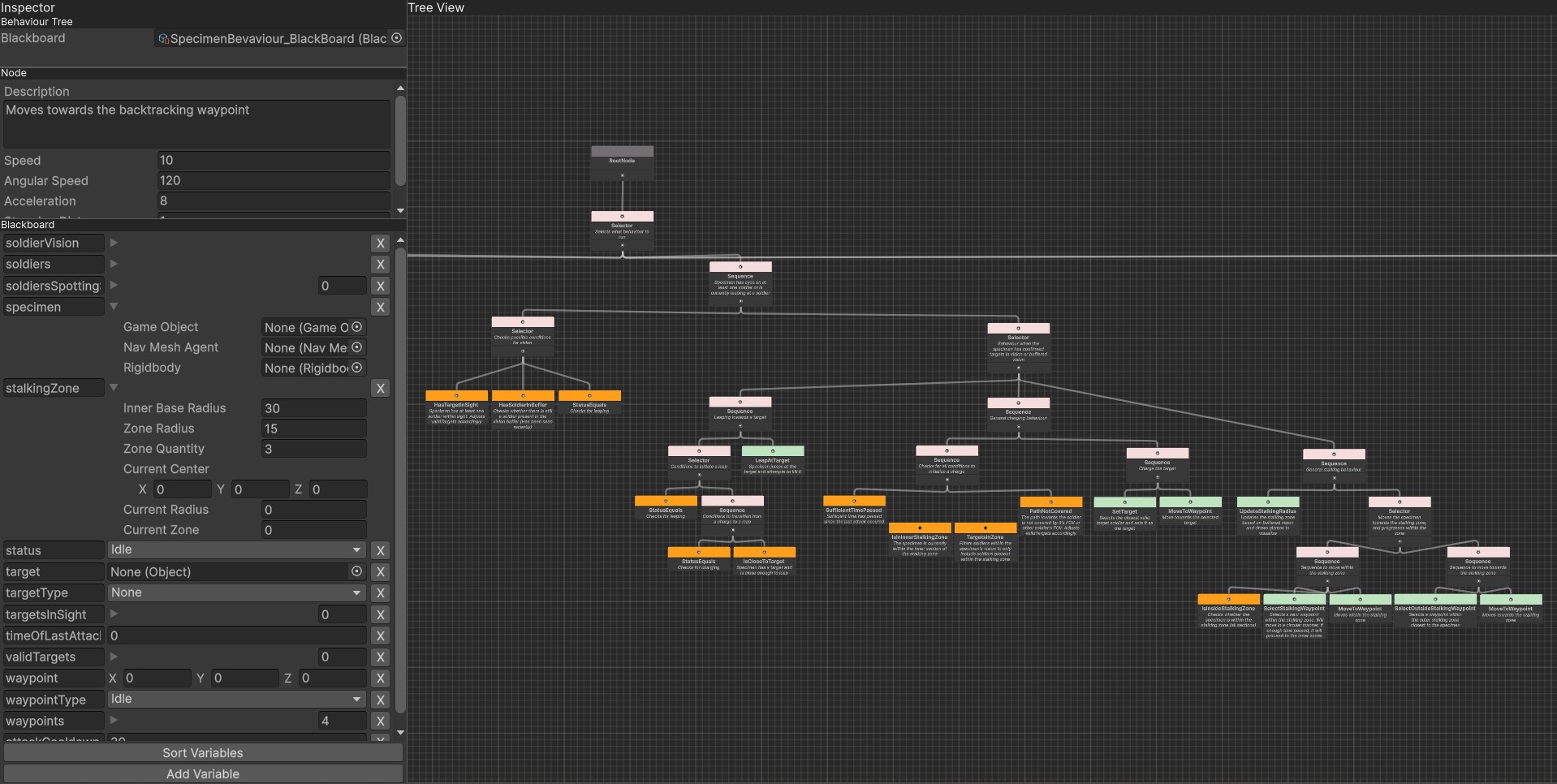

At this point, the core of the behaviour tree system was in place, and in theory it could already be used to build behaviour trees. In practice, however, it was cumbersome: every node had to be instantiated and given its children in code. This made the tree's structure hard to build and visualize, and debugging execution paths and sub-behaviours was tedious. Next on the list was an editor in which nodes can be placed and connected. I built it with the EditorWindow module for the custom editor window and the experimental GraphView module for the view in which nodes are added and connected to their children. This significantly improved the workflow of creating behaviour trees and made the tree's structure much easier to see. Here is what the editor looks like, showing part of the specimen behaviour tree:

Editing a behaviour tree was now much more streamlined, but debugging was still challenging. My next step was to update the editor at runtime to show which node is currently running. I dislike not knowing what is happening inside a system (a black box), which I blame on my AI background. I therefore wanted the editor to show as much real-time information as possible at runtime. The editor now displays the current execution path from the root to the running node and marks the nodes that were evaluated but failed. It also shows the entire contents of the blackboard. Getting generic data to show up in an editor window took some tinkering and required some runtime serialization and deserialization. Still, it drastically improves debugging, because you can see what is going on under the hood and what the tree bases its decisions on. An example of a runtime view of the graph can be seen here:

Specimen Behaviour

One primary source of inspiration for the specimen's AI was the 2014 horror game Alien: Isolation by Creative Assembly. Its AI gives the player a strong feeling of dread: they know they are up against something invincible and likely more intelligent than them. We wanted our players to feel the same, except that our specimen is merely hard to kill rather than invincible. To accomplish this, I followed the general structure of Alien: Isolation's AI, which consists of two distinct parts: a macro behaviour system and a micro behaviour system. The macro system acts as a manager, directing the alien and telling it when to go somewhere and when to back off. The micro system is the alien's own behaviour and is entirely based on senses: it relies on visual, auditory, and tactile sensors to find and hunt the player. Under no circumstances does the alien itself know the player's location or intentions; it relies solely on the manager to give it points of interest.

To achieve the same sense of fear and dread, I used a similar dual-level system. The higher-level system helps the specimen find a target, and the lower-level system hunts that target using its senses. Unfortunately, due to the project's narrow timeframe, significant cuts had to be made to the behaviour. To start with, the higher-level system was very simple and unintelligent. Its sole purpose was to send the specimen to a randomly picked point of interest from a predefined list. Once the specimen arrived, it selected a new point of interest and travelled there. This cycle repeated until the specimen found a target. The aim was a basic high-level patrol that would help the specimen find targets, and although rudimentary, it worked as intended. To help it find targets quickly and consistently, the specimen was given powerful senses, with eyesight covering about 30% of the level.
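The macro-level patrol described above is essentially a loop: pick a point of interest, go there, look around, repeat. The Python sketch below is an illustration, not the project's C# code, and uses hypothetical helpers. `can_see` is a plain range-and-field-of-view test with no occlusion, and movement is abstracted to jumping between points of interest. The view range and field of view are the parameters one would tune to make the eyesight cover a given share of the level.

```python
import math
import random
from typing import Optional, Sequence, Tuple

Point = Tuple[float, float]


def can_see(eye: Point, facing_deg: float, target: Point,
            view_range: float, fov_deg: float) -> bool:
    """Range and field-of-view test; occlusion checks are left out of this sketch."""
    dx, dy = target[0] - eye[0], target[1] - eye[1]
    if math.hypot(dx, dy) > view_range:
        return False
    bearing = math.degrees(math.atan2(dy, dx))
    # Signed angle between facing and bearing, wrapped into [-180, 180).
    diff = (bearing - facing_deg + 180.0) % 360.0 - 180.0
    return abs(diff) <= fov_deg / 2.0


def pick_next_poi(pois: Sequence[Point], current: Optional[Point],
                  rng: random.Random) -> Point:
    """Random point of interest, avoiding the one just reached when possible."""
    candidates = [p for p in pois if p != current] or list(pois)
    return rng.choice(candidates)


def patrol_until_target(start: Point, pois: Sequence[Point], soldiers: Sequence[Point],
                        view_range: float, fov_deg: float, rng: random.Random,
                        max_legs: int = 100) -> Tuple[Point, Optional[Point]]:
    """Macro loop: travel between random POIs until a soldier comes into view.

    Movement is abstracted to jumping from POI to POI; the specimen faces the
    direction of its last leg. Returns (final position, spotted soldier or None).
    """
    pos: Point = start
    current: Optional[Point] = None
    for _ in range(max_legs):
        nxt = pick_next_poi(pois, current, rng)
        facing = math.degrees(math.atan2(nxt[1] - pos[1], nxt[0] - pos[0]))
        pos = current = nxt
        for soldier in soldiers:
            if can_see(pos, facing, soldier, view_range, fov_deg):
                return pos, soldier
    return pos, None


rng = random.Random(7)
print(patrol_until_target((-5.0, 0.0), [(0.0, 0.0), (5.0, 0.0)], [(10.0, 0.0)],
                          view_range=20.0, fov_deg=90.0, rng=rng)[1])  # (10.0, 0.0)
```

Once a soldier is spotted, control would pass from this macro loop to the sense-driven micro behaviours.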

Significant cuts also had to be made to the much more complex micro-level behaviour system. First, the specimen was limited to visual senses only. Auditory senses were planned but did not make the final cut in time. Even so, purely visual senses were enough to create an interesting behavioural pattern. The specimen's behaviour consisted of five high-level behaviours: stalking, charging/attacking, evading, fleeing, and backtracking.
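In a behaviour tree, a set of mutually exclusive behaviours like these is commonly placed under a priority selector, with each behaviour guarded by a condition. The sketch below shows that selection logic in isolation as a flat Python function. The priority order, the blackboard keys (`leaping`, `just_attacked`, `in_line_of_fire`, and so on), and the fallback to patrol are hypothetical. They are chosen to match the behaviour descriptions and are not taken from the project's actual tree.

```python
from typing import Any, Callable, Dict, List, Tuple

Blackboard = Dict[str, Any]

# Hypothetical priority order and blackboard keys. The first guard that passes
# wins, which is how a selector over condition-guarded sequences behaves.
PRIORITIES: List[Tuple[str, Callable[[Blackboard], Any]]] = [
    ("leap", lambda b: b.get("leaping", False)),  # committed: cannot abort
    ("flee", lambda b: b.get("just_attacked", False)),
    ("evade", lambda b: b.get("in_line_of_fire", False)),
    ("charge", lambda b: (b.get("target_visible", False)
                          and b.get("in_inner_zone", False)  # set after the stalking dwell time
                          and b.get("safe_path", False))),
    ("stalk", lambda b: b.get("target_visible", False)),
    ("backtrack", lambda b: b.get("last_known_position") is not None),
]


def choose_behaviour(bb: Blackboard) -> str:
    """Return the highest-priority behaviour whose guard passes."""
    for name, guard in PRIORITIES:
        if guard(bb):
            return name
    return "patrol"  # nothing applies: defer to the macro-level patrol


print(choose_behaviour({"target_visible": True}))  # stalk
```

Putting the committed leap first means no later guard can interrupt it. This mirrors the rule that the leap cannot be aborted.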

Stalking is the behaviour the specimen displays when it has a clear view of a target but is still too far away to initiate a charge. The specimen tries to encircle the target and creep closer. It must stay within this 'stalking zone' for a sufficient amount of time before it can advance into the zone's inner area. Once the specimen is in this inner area and has a view of the target, it can start charging.

Charging involves the specimen moving towards the target at a higher velocity. The specimen only charges when it finds a valid and relatively safe path to its target. If the straight path from the specimen to the current target is within view of any soldier, the path is discarded, as following it would likely get the specimen killed. After starting a charge, the specimen can still abandon it if the path comes into view of soldiers. Once the specimen is close enough, it leaps at the target, throwing itself at it at high velocity. This decision is final, and the specimen cannot abort it. If the leap connects, the target is killed instantly.

After an attack, the specimen starts fleeing: it quickly leaves the attack location and moves to a random point of interest at high speed. This behaviour helps the specimen avoid being killed after the attack and gives the player time to recover.

Backtracking moves the specimen back to the last known position of a target, letting it find a target quickly without going through the entire higher-level patrol sequence. It can trigger after the specimen has fled from an attack, or after any prolonged loss of sight of targets.

Lastly, evading allows the specimen to stay out of a target's line of fire without moving too far away from the target. If, at any point, the specimen is within a soldier's line of fire, it moves to the closest position that is not in any soldier's line of fire.
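Both the charge-path check and evading rest on the same question: can a soldier fire at a given point? Below is a minimal 2D sketch under simplifying assumptions. Soldiers are points with a fixed fire range and all-round awareness. Cover is a set of circles (for example tree trunks) that block the line. The charge path is checked by sampling points along the straight line. All function names are hypithetical, and in Unity such checks would more likely be done with physics raycasts against the scene.

```python
import math
from typing import Optional, Sequence, Tuple

Point = Tuple[float, float]
Circle = Tuple[Point, float]  # (centre, radius), e.g. a tree trunk that blocks fire


def _segment_point_distance(a: Point, b: Point, p: Point) -> float:
    """Shortest distance from point p to the segment a-b."""
    dx, dy = b[0] - a[0], b[1] - a[1]
    length_sq = dx * dx + dy * dy
    t = 0.0
    if length_sq > 0:
        t = max(0.0, min(1.0, ((p[0] - a[0]) * dx + (p[1] - a[1]) * dy) / length_sq))
    return math.hypot(p[0] - (a[0] + t * dx), p[1] - (a[1] + t * dy))


def in_line_of_fire(p: Point, soldiers: Sequence[Point],
                    obstacles: Sequence[Circle], fire_range: float) -> bool:
    """True if some soldier within range has an unobstructed line to p."""
    for s in soldiers:
        if math.dist(s, p) > fire_range:
            continue
        blocked = any(_segment_point_distance(s, p, c) < r for c, r in obstacles)
        if not blocked:
            return True
    return False


def charge_path_is_safe(start: Point, target: Point, watchers: Sequence[Point],
                        obstacles: Sequence[Circle], fire_range: float,
                        samples: int = 20) -> bool:
    """Reject the straight charge path if any sampled point on it is exposed.

    `watchers` are the soldiers other than the target itself (an assumption:
    otherwise the target's own view of the endpoint would veto every charge).
    """
    for i in range(samples + 1):
        t = i / samples
        q = (start[0] + t * (target[0] - start[0]), start[1] + t * (target[1] - start[1]))
        if in_line_of_fire(q, watchers, obstacles, fire_range):
            return False
    return True


def closest_cover(pos: Point, candidates: Sequence[Point], soldiers: Sequence[Point],
                  obstacles: Sequence[Circle], fire_range: float) -> Optional[Point]:
    """Evade: the nearest candidate position outside every soldier's line of fire."""
    safe = [c for c in candidates
            if not in_line_of_fire(c, soldiers, obstacles, fire_range)]
    return min(safe, key=lambda c: math.dist(pos, c), default=None)


soldiers = [(0.0, 0.0)]
trees = [((5.0, 0.0), 1.0)]
print(in_line_of_fire((0.0, 10.0), soldiers, trees, 20.0))  # True
print(closest_cover((0.0, 10.0), [(0.0, 12.0), (10.0, 0.0), (10.0, 1.0)],
                    soldiers, trees, 20.0))  # (10.0, 1.0)
```

In the example, the specimen at (0, 10) is exposed. It picks (10, 1) because that is the nearest candidate hidden behind the tree, even though (10, 0) is equally covered.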

Furthermore, tuning the area costs on the navigation mesh kept the specimen well hidden from the thermal camera. Lowering the cost of moving through heavily covered areas, such as forests or buildings, and raising the cost of moving through open terrain meant the specimen hid its heat signature from the player effectively. This made it much harder to keep track of the specimens and increased the fear factor for players.
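The effect of these costs can be illustrated with any cost-weighted shortest-path search. In Unity the costs are configured on navigation areas and the engine's pathfinder takes them into account. The sketch below does not use Unity's API. It runs plain Dijkstra on a small grid, with hypothetical terrain symbols and cost values in which open ground is five times as expensive as cover.

```python
import heapq
from typing import Dict, List, Optional, Tuple

Cell = Tuple[int, int]

# Hypothetical costs per terrain symbol: cover is cheap, open ground expensive.
# Any symbol not listed (e.g. '#') is treated as impassable.
AREA_COST = {"F": 1.0, "B": 1.0, ".": 5.0}  # F = forest, B = building, . = open


def cheapest_path(grid: List[str], start: Cell, goal: Cell) -> Optional[List[Cell]]:
    """Dijkstra on a 4-connected grid; entering a cell costs AREA_COST[symbol]."""
    rows, cols = len(grid), len(grid[0])
    dist: Dict[Cell, float] = {start: 0.0}
    prev: Dict[Cell, Cell] = {}
    heap = [(0.0, start)]
    while heap:
        d, cell = heapq.heappop(heap)
        if cell == goal:
            path = [cell]
            while cell in prev:  # walk predecessors back to the start
                cell = prev[cell]
                path.append(cell)
            return path[::-1]
        if d > dist[cell]:
            continue  # stale heap entry
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] in AREA_COST:
                nd = d + AREA_COST[grid[nr][nc]]
                if nd < dist.get((nr, nc), float("inf")):
                    dist[(nr, nc)] = nd
                    prev[(nr, nc)] = cell
                    heapq.heappush(heap, (nd, (nr, nc)))
    return None


# The open bottom row is shorter, but the cheapest route detours through cover.
grid = ["FFBFF",
        "....."]
print(cheapest_path(grid, (1, 0), (1, 4)))
# [(1, 0), (0, 0), (0, 1), (0, 2), (0, 3), (0, 4), (1, 4)]
```

The straight route costs 20 and the detour through the forest and building row costs 10, so the search prefers cover even though the detour takes more steps.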

This system met the enemy's initial design goals and requirements. It combines the five high-level behaviours described above with the macro-level guidance system. Despite the constraints, the overall result is convincing: players experienced the specimen as intelligent, and it proved to be a genuinely lethal threat.

Lessons Learned

I learned a tremendous amount from this project. It was my first time diving into complex game AI, and I didn't choose the easiest path either. Instead of just implementing the behaviours, I built the entire core and creation pipeline of the system from scratch. In hindsight, this choice was not optimal for the quality of the final behaviour. Developing the system took roughly a third of the total time spent on the project, and that time could have gone into improving the AI. On the other hand, implementing it from scratch taught me a great deal. It gave me a full understanding of how behaviour trees work internally. That understanding helped greatly in developing the final AI and should help with future implementations, regardless of the editor or engine they are built in.

This project helped me explore various frameworks for creating game AI and understand their respective strengths and weaknesses. It also drastically improved my general understanding of behaviour trees and of the designs and patterns used in popular games.

If I were to redo this project, I would change some of my approaches. First, thorough testing of the individual behaviours was not a priority in this project, which I regretted towards the end. Some behaviours worked very well in a basic static test environment. When they were used in the final, more complex scene, however, they did not work as well, which in hindsight was to be expected. Proper, thorough testing would have prevented a lot of headaches and lost sleep towards the end of the project. I would also spend more time planning the behaviours and judging how well they would work together. This would have saved me a lot of unnecessary rewriting and scrapping of implemented behaviours.

Tools & Skills Used

See More

Please note that the repository only contains the implementation of the behaviour tree system, including the editor.

GitHub Repository