Project Overview

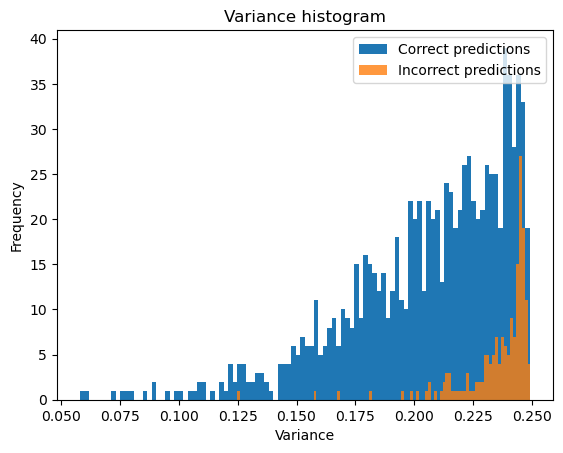

Accurate estimation of uncertainty plays a pivotal role in enhancing the reliability and interpretability of predictive models. This paper builds upon previous methods by proposing a novel approach that not only quantifies the uncertainty of a model's prediction but also traces the sources of this uncertainty using a gradient-based interpretability method. In this study, an existing neural network architecture designed to classify electroencephalogram (EEG) signals is modified by adding an output head that predicts uncertainty. The contribution of each input feature to this uncertainty prediction can then be calculated, ultimately pointing towards the source of uncertainty. The proposed approach is evaluated on a dataset of Error-Related Potentials. To test the method's effectiveness, artificial noise is introduced into specific regions of the signals, challenging both the uncertainty prediction and the localization of the noise. The results show that the modified model is capable of predicting the uncertainty associated with the classification. However, despite the successful uncertainty estimation, tracing the sources of uncertainty proves challenging, and the exact sources remain elusive.
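The mechanics described above (a shared network with a classification head plus an uncertainty head, a gradient of the uncertainty output with respect to the input, and artificial noise injected into a known region) can be illustrated with a small sketch. Everything here is hypothetical and not the project's actual code: the toy one-layer encoder, the softplus uncertainty head, the array sizes, the `inject_noise` helper, and the choice of gradient × input as the attribution method are all assumptions. NumPy with a hand-derived gradient is used only to keep the example self-contained; a real model would rely on an autodiff framework. The weights are random and untrained, so the sketch does not claim that the attribution will actually find the noisy channel.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical sizes: EEG epoch of N_CHANNELS x N_SAMPLES, binary classification.
N_CHANNELS, N_SAMPLES, N_HIDDEN, N_CLASSES = 8, 64, 16, 2


def softplus(z):
    # Numerically stable log(1 + exp(z)); keeps the uncertainty score positive.
    return np.logaddexp(0.0, z)


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


class TwoHeadModel:
    """Toy shared encoder with a classification head and a scalar uncertainty head."""

    def __init__(self, rng):
        d_in = N_CHANNELS * N_SAMPLES
        self.W1 = rng.normal(0, 0.05, (N_HIDDEN, d_in))   # shared encoder
        self.b1 = np.zeros(N_HIDDEN)
        self.W2 = rng.normal(0, 0.5, (N_CLASSES, N_HIDDEN))  # classification head
        self.b2 = np.zeros(N_CLASSES)
        self.w3 = rng.normal(0, 0.5, N_HIDDEN)             # uncertainty head
        self.b3 = 0.0

    def forward(self, x):
        h = np.tanh(self.W1 @ x.ravel() + self.b1)
        logits = self.W2 @ h + self.b2
        u = softplus(self.w3 @ h + self.b3)
        return logits, u, h

    def uncertainty_grad(self, x):
        """Analytic du/dx via the chain rule, reshaped to (channels, samples)."""
        _, _, h = self.forward(x)
        z = self.w3 @ h + self.b3
        # softplus'(z) = sigmoid(z); tanh'(a) = 1 - tanh(a)^2; then back through W1.
        dh = sigmoid(z) * self.w3 * (1.0 - h ** 2)
        return (dh @ self.W1).reshape(x.shape)


def inject_noise(x, channel, start, stop, scale, rng):
    """Hypothetical helper: add Gaussian noise to one channel within [start, stop)."""
    noisy = x.copy()
    noisy[channel, start:stop] += rng.normal(0, scale, stop - start)
    return noisy


model = TwoHeadModel(rng)
clean = rng.normal(0, 1, (N_CHANNELS, N_SAMPLES))
noisy = inject_noise(clean, channel=3, start=20, stop=40, scale=3.0, rng=rng)

logits, u, _ = model.forward(noisy)
# Gradient x input attribution of the uncertainty output, aggregated per channel.
attribution = np.abs(model.uncertainty_grad(noisy) * noisy)
channel_scores = attribution.sum(axis=1)

print("predicted class:", int(np.argmax(logits)))
print("uncertainty:", float(u))
print("most-attributed channel:", int(np.argmax(channel_scores)), "(noise in channel 3)")
```

Because the noise location is known, the per-channel (or per-time-window) attribution scores can be compared with the injected region. This is the kind of localization check the evaluation relies on, and, as the results above note, it is where tracing the source of uncertainty can fall short.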